Amazon now offers what I would call “indestructible, foolproof” file storage. S3 already provides an extremely high level of storage durability, with multiple geographically separated copies of your data. Now Amazon has taken it a step further with versioning, so even if you accidentally delete your data, it’s still safe.

The Amazon S3 announcement, which just popped into my inbox:

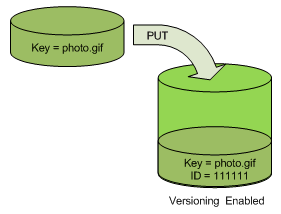

We are pleased to announce the availability of the Versioning feature for beta use across all of our Amazon S3 Regions. Versioning allows you to preserve, retrieve, and restore every version of every object in an Amazon S3 bucket. Once you enable Versioning for a bucket, Amazon S3 preserves existing objects any time you perform a PUT, POST, COPY, or DELETE operation on them. By default, GET requests will retrieve the most recently written version. Older versions of an overwritten or deleted object can be retrieved by specifying a version in the request.

You can read more about how to use versioning here.
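To make the behaviour described in the announcement concrete, here is a minimal sketch in Python. It is a toy in-memory model, not the real S3 API: the `VersionedBucket` class, its method names and the integer version IDs are all assumptions made for illustration (real S3 version IDs are opaque strings). It only mimics the semantics: every PUT keeps the previous version, a DELETE adds a "delete marker" instead of erasing anything, and a GET returns the latest version unless you ask for a specific one.

```python
import itertools


class VersionedBucket:
    """Toy in-memory model of a versioning-enabled bucket (NOT the real S3 API)."""

    # Sentinel stored in place of a body when an object is "deleted".
    _DELETE_MARKER = object()

    def __init__(self):
        self._versions = {}  # key -> list of (version_id, body), oldest first
        self._ids = itertools.count(1)  # assumption: simple integer version IDs

    def put(self, key, body):
        """Store a new version and return its ID; older versions are kept."""
        version_id = next(self._ids)
        self._versions.setdefault(key, []).append((version_id, body))
        return version_id

    def delete(self, key):
        """Add a delete marker as the newest version; nothing is erased."""
        return self.put(key, self._DELETE_MARKER)

    def get(self, key, version_id=None):
        """Return the latest version, or a specific one if version_id is given."""
        history = self._versions.get(key, [])
        if version_id is None:
            candidates = history[-1:]
        else:
            candidates = [v for v in history if v[0] == version_id]
        if not candidates or candidates[0][1] is self._DELETE_MARKER:
            raise KeyError(key)
        return candidates[0][1]


bucket = VersionedBucket()
v1 = bucket.put("photo.jpg", b"original")
v2 = bucket.put("photo.jpg", b"edited")
bucket.delete("photo.jpg")

# A plain get now fails, but both earlier versions are still retrievable.
recovered = bucket.get("photo.jpg", version_id=v1)
```

In this model an accidental overwrite or delete never destroys data, which is the point of the feature; the price is that every version stays in storage.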

Obviously you’ll have to pay for the extra space taken up by versions, but this looks like a really top-class option for storing data that’s not regularly updated, e.g. an image archive.
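As a rough illustration of the space cost, the sketch below adds up the size of every retained version of one object. The file sizes are made-up example numbers, and `stored_bytes` is a hypothetical helper, not part of any AWS tool; the only assumption about S3 is that each stored version counts in full.

```python
def stored_bytes(version_sizes):
    """Total bytes kept for one object when every version is retained in full."""
    return sum(version_sizes)


# Hypothetical example: a ~2 MB image that has been overwritten twice.
sizes = [2_000_000, 2_100_000, 1_900_000]

total = stored_bytes(sizes)  # space used with versioning enabled
latest_only = sizes[-1]      # space used if only the newest copy were kept

print(f"with versioning: {total} bytes, without: {latest_only} bytes")
```

For an archive that is written once and rarely changed, the two numbers stay close together, which is why versioning suits that kind of data.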

I’d also be interested to see if this spawns any new uses for Amazon Web Services…

You can manage Amazon S3 versioning with this free software: http://www.dragondisk.com

A new feature that will help clients recover accidentally overwritten or deleted objects. But they will be charged for every version of an object that is stored! So think twice before you enable versioning on your Amazon S3 bucket.

Hi,

Can you tell me how large a file we can upload with this tool?

Thanks

I think it’s usually 5GB, though there’s a separate API call for storing files up to 5TB in size. See:

http://aws.typepad.com/aws/2010/12/amazon-s3-object-size-limit.html